What is Automated Web Scraping? Complete Guide for 2026

In 2026, automated web scraping has evolved from a technical niche into essential business infrastructure. With 67% of organizations now using automated web scraping as a core data operation and the market projected to reach $8.5 billion by 2032, understanding this technology is no longer optional: it is a competitive necessity.

Yet many businesses still collect competitor data manually, unaware that automated solutions can extract pricing, product details, and market intelligence 30-40% faster than traditional methods. The barrier to entry has dropped dramatically: no-code platforms now enable non-technical teams to build sophisticated data pipelines in minutes rather than weeks.

This guide explains what automated web scraping is in 2026, how it works without coding knowledge, and why leading retailers depend on it for real-time competitive intelligence.

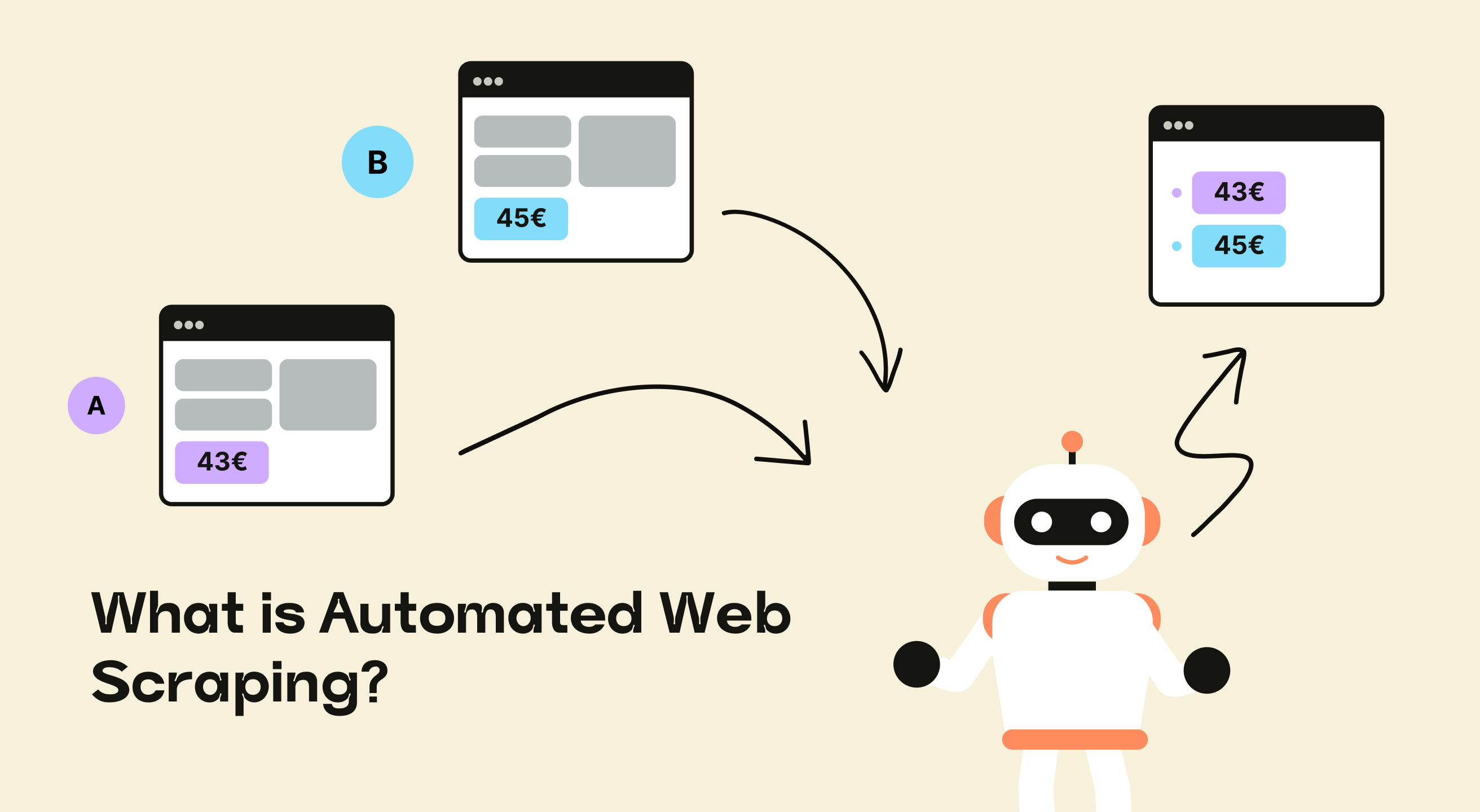

What Is Automated Web Scraping?

Automated web scraping is software that extracts data from websites systematically and continuously without human intervention. Unlike one-time manual data collection, automated scrapers run on schedules—hourly, daily, or in real-time—to monitor changes in pricing, inventory, product descriptions, and competitor strategies across hundreds or thousands of pages.

The technology handles everything traditional manual collection cannot scale to address: detecting when websites update content, adapting to layout changes, managing anti-bot protections, and delivering clean structured data ready for analysis. According to Apify's 2026 State of Web Scraping Report, 49-51% of all internet traffic now comes from bots, with automated data collection becoming one of the core engines powering modern business intelligence.

Modern scraping platforms have democratized access to web data. What once required engineering teams and weeks of development now works through visual interfaces where users point and click to define what data they need. This shift has moved web scraping from IT departments into the hands of pricing analysts, marketing teams, and operations managers who need competitive data to make daily decisions.

How Automated Web Scraping Works (No Code Required)

The 2026 generation of web scraping tools requires zero programming knowledge. These platforms use visual selectors and AI-powered detection to identify data automatically, making extraction accessible to anyone who can navigate a website.

Point-and-Click Data Selection

Modern no-code scrapers work through browser extensions or cloud platforms where users simply click on website elements they want to extract. The tool recognizes patterns—if you click on a product price, it identifies all other prices on the page and across similar pages automatically.
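Behind a click like this, the tool typically ends up with a selector that matches every similar element. The following is a minimal sketch of that idea using only Python's standard-library `html.parser`. The `price` class name, the sample HTML, and the helper names are assumptions made for illustration, not any platform's actual implementation.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag; ignored so depth tracking stays balanced.
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}


class ClassTextCollector(HTMLParser):
    """Collects the text of every element that carries a given CSS class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.matches = []
        self._depth = 0  # greater than 0 while inside a matching element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self._depth:
            self._depth += 1
        elif self.target_class in classes:
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            self.matches.append("".join(self._buffer).strip())

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def extract_by_class(html_text, css_class):
    parser = ClassTextCollector(css_class)
    parser.feed(html_text)
    parser.close()
    return parser.matches


# Hypothetical product listing used only for this example.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Kettle</span> <span class="price">$24.99</span></li>
  <li><span class="name">Toaster</span> <span class="price">$39.50</span></li>
  <li><span class="name">Blender</span> <span class="price sale">$54.00</span></li>
</ul>
"""

prices = extract_by_class(SAMPLE_HTML, "price")
```

Selecting one price and generalizing to "everything with the same class" is the simplest version of pattern recognition; commercial tools presumably combine several signals, so treat this as a sketch of the concept only.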

Platforms like ScrapeWise.ai, Browse AI, and Octoparse have invested heavily in this visual training approach. According to industry benchmarks, teams using these tools report 3-5x faster time-to-value compared to building custom scraping solutions from scratch.

Scheduled Automation

Once configured, scrapers run automatically on schedules you define:

- Hourly monitoring for dynamic pricing and stock availability

- Daily updates for product catalogs and inventory changes

- Real-time extraction for time-sensitive competitive intelligence

- Event-triggered scraping when specific conditions occur (price drops, new product launches)

This continuous operation transforms web scraping from a one-time data pull into an always-on intelligence system that keeps pace with market changes.
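At its core, a schedule like the ones listed above comes down to deciding which jobs are due at a given moment. Here is a small sketch of that check, assuming hypothetical job names and intervals. A managed platform would handle this for you, and a self-hosted setup might simply use cron.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ScrapeJob:
    name: str
    interval: timedelta
    last_run: datetime

    def is_due(self, now):
        # A job is due once its full interval has elapsed since the last run.
        return now - self.last_run >= self.interval


def due_jobs(jobs, now):
    """Return the names of jobs whose interval has elapsed."""
    return [job.name for job in jobs if job.is_due(now)]


# Fixed timestamp so the example is reproducible.
now = datetime(2026, 1, 15, 12, 0)
jobs = [
    ScrapeJob("price-monitor", timedelta(hours=1), now - timedelta(minutes=75)),
    ScrapeJob("catalog-refresh", timedelta(days=1), now - timedelta(hours=3)),
    ScrapeJob("stock-check", timedelta(hours=1), now - timedelta(minutes=20)),
]
ready = due_jobs(jobs, now)
```

In this example only `price-monitor` is due, because it last ran 75 minutes ago against a one-hour interval.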

Structured Data Export

Automated scrapers deliver data in formats your systems already use:

- CSV/Excel for spreadsheet analysis and manual review

- JSON/XML for API integrations and database imports

- Google Sheets for collaborative team dashboards

- Direct database connections for enterprise data warehouses

The structured output means data arrives clean and ready for analysis rather than requiring hours of manual formatting and cleanup.
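To make the export step concrete, here is a short sketch that writes the same records as both CSV and JSON using Python's standard `csv` and `json` modules. The field names and sample records are assumptions for illustration.

```python
import csv
import io
import json

# Hypothetical scraped records.
records = [
    {"sku": "KT-100", "name": "Kettle", "price": 24.99, "in_stock": True},
    {"sku": "TS-200", "name": "Toaster", "price": 39.50, "in_stock": False},
]


def to_csv(rows):
    """Serialize a list of dicts to CSV text, using the first row's keys as the header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize a list of dicts to pretty-printed JSON text."""
    return json.dumps(rows, indent=2)


csv_text = to_csv(records)
json_text = to_json(records)
```

CSV flattens every value to text, while JSON keeps numbers and booleans typed, which is one reason JSON tends to suit API integrations and database imports better.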

Built-In Anti-Detection

Professional scraping platforms handle the technical complexities that cause amateur scrapers to fail:

- Proxy rotation across residential and datacenter IPs to avoid blocks

- CAPTCHA handling through automated solving services and browser fingerprint management

- Rate limiting to respect website resources and avoid detection

- JavaScript rendering for modern single-page applications

These features, once requiring specialized engineering knowledge, now operate transparently in the background of managed scraping services.
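Proxy rotation is the easiest of these features to illustrate. The sketch below assigns proxies to requests in round-robin order. The proxy addresses and URLs are placeholders, and real services likely use more elaborate selection (health checks, geography, session stickiness).

```python
from itertools import cycle

# Placeholder proxy endpoints; a real pool would come from a proxy provider.
PROXIES = [
    "http://proxy-a.example.com:8080",
    "http://proxy-b.example.com:8080",
    "http://proxy-c.example.com:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._pool = cycle(proxies)

    def next_proxy(self):
        return next(self._pool)


def plan_requests(urls, rotator):
    """Pair each URL with the proxy that should carry the request."""
    return [(url, rotator.next_proxy()) for url in urls]


urls = [f"https://shop.example.com/products?page={n}" for n in range(1, 5)]
plan = plan_requests(urls, ProxyRotator(PROXIES))
```

With three proxies and four URLs, the fourth request wraps around to the first proxy. In a hand-rolled scraper, the chosen address could then be passed to something like `urllib.request.ProxyHandler`.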

Web Scraping vs API Integration: When to Use Each

APIs and web scraping serve different data access needs. Understanding when to use each approach determines whether you get the competitive intelligence you need or hit roadblocks.

When APIs Work Best

APIs excel when:

- The data provider offers official API access

- You need real-time updates pushed to your systems

- Data structures are stable and well-documented

- You're accessing your own platform's data or partner integrations

Many SaaS platforms, payment processors, and social networks provide robust APIs. If an API exists for your data source and meets your needs, it's usually the preferred approach.

When Web Scraping Is Essential

Web scraping becomes necessary when:

- No API exists — Most competitor websites don't offer APIs to access their pricing and product data

- API limitations restrict needed data — Official APIs often expose only a subset of publicly visible information

- Real-time competitor monitoring — APIs rarely provide access to competitor business intelligence

- Cost constraints — API access can be expensive or require business partnerships; public web data is accessible to everyone

- Flexibility requirements — Scraping allows you to define exactly what data you extract rather than accepting API-imposed structures

According to the 2026 Apify Report, 65.8% of scraping professionals increased proxy usage and 62.5% increased infrastructure expenses, driven largely by the need to access data that APIs don't provide. This investment reflects the strategic value of competitive intelligence that only web scraping can deliver.

The Hybrid Approach

Leading data teams use both methods strategically. They rely on APIs for internal systems and official partnerships while using web scraping for competitive market intelligence. This combination delivers comprehensive data coverage without artificial limitations.

Is Automated Web Scraping Legal? (EU & Global Compliance)

Web scraping legality is one of the most frequently asked questions, particularly as compliance frameworks tighten globally in 2026.

Legal in the EU for Public Data

Yes, automated web scraping is legal in the EU when practiced responsibly. The EU's Digital Markets Act and recent court precedents establish that publicly accessible data—information visible without logging in or bypassing paywalls—can be collected for business purposes.

Key principles for EU compliance:

- Public data only — Information visible to any website visitor without authentication is generally scrapable

- Respect robots.txt — Honor website directives about automated access

- No personal data without consent — GDPR applies to any personally identifiable information; avoid collecting it unless you have legal basis

- Fair use practices — Don't overload websites with excessive requests that could disrupt their operations
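The robots.txt principle above can be checked programmatically. This sketch uses Python's standard `urllib.robotparser` against an inline robots.txt so that it runs without network access. The file contents, the bot name, and the shop URLs are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
Crawl-delay: 2
"""

USER_AGENT = "example-price-bot"  # hypothetical bot name

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

can_products = parser.can_fetch(
    USER_AGENT, "https://shop.example.com/products/kettle"
)
can_checkout = parser.can_fetch(
    USER_AGENT, "https://shop.example.com/checkout/cart"
)
delay = parser.crawl_delay(USER_AGENT)  # seconds between requests, if declared
```

Here the product page is allowed, the checkout path is not, and the declared crawl delay of 2 seconds gives a starting point for request pacing. Passing a robots.txt check is a technical courtesy and does not by itself settle the legal questions discussed in this section.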

The EU AI Act, in effect as of 2026, requires transparency about how AI models are trained on scraped data. Organizations using web scraping to train machine learning systems must maintain data lineage records showing where content originated.

Global Compliance Considerations

Beyond the EU, web scraping compliance varies by jurisdiction:

- United States: Scraping publicly accessible data is generally not treated as a violation of the Computer Fraud and Abuse Act (CFAA), with important exceptions around terms of service violations and anti-circumvention

- CCPA (California) — Requires careful handling of any consumer data collected from California residents

- Australia — Similar to EU frameworks with emphasis on fair use and respecting website terms

The 2026 regulatory landscape is converging toward "bot disclosure mandates" where crawlers may need to identify themselves, and "rate-limit governance" tied to fair use principles. Forward-thinking scraping providers are already implementing transparency logs and adaptive request pacing to stay ahead of these requirements.

Ethical Scraping Best Practices

Beyond legal compliance, ethical scraping matters for long-term sustainability:

- Use reasonable rate limits (Apify's 2026 data shows 58.3% increased proxy spending year-over-year partly to implement polite crawling)

- Identify your bot with accurate user agent strings

- Collect only the data you actually need for legitimate business purposes

- Store and process scraped data securely with appropriate access controls

Platforms like ScrapeWise.ai build compliance into their infrastructure, handling rate limiting, geo-compliance, and data governance automatically so teams can focus on insights rather than legal risk management.

Common Automated Web Scraping Mistakes (And How to Avoid Them)

Even with no-code tools, certain pitfalls cause scraping projects to fail or deliver poor results. Recognizing these mistakes early saves time and improves data quality.

1. Scraping the Wrong Pages or Elements

The Problem: Teams often scrape category pages when they need individual product details, or extract promotional text thinking it's the actual price.

The Solution: Before automating, manually verify you're targeting the exact pages and data fields you need. Test with 5-10 examples to confirm the scraper captures what you expect. Most platforms offer preview modes—use them.

2. Failing to Handle Pagination and Infinite Scroll

The Problem: Modern websites split product catalogs across multiple pages or use infinite scroll. Scrapers that don't account for this miss 80-90% of available data.

The Solution: Configure your scraper to follow "Next" buttons or trigger scroll events. Most no-code platforms include pagination detection, but you need to enable it. For infinite scroll sites, browser automation tools like Playwright and Selenium (used by 26.1% of professionals according to 2026 data) can drive a real browser, scroll the page, and wait for JavaScript-triggered content to load.
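The difference between scraping one page and following pagination can be sketched as a loop that keeps requesting the "next" link until none remains. To stay runnable offline, the example uses an in-memory stand-in for a website. `PAGES` and `fetch_page` are hypothetical and would be replaced by real HTTP requests and parsing.

```python
# In-memory stand-in for a paginated catalog (hypothetical data).
PAGES = {
    "/products?page=1": {"items": ["Kettle", "Toaster"], "next": "/products?page=2"},
    "/products?page=2": {"items": ["Blender", "Mixer"], "next": "/products?page=3"},
    "/products?page=3": {"items": ["Juicer"], "next": None},
}


def fetch_page(url):
    """Stand-in for an HTTP request plus HTML parsing."""
    return PAGES[url]


def scrape_all(start_url, max_pages=100):
    """Follow 'next' links until they run out, a loop is detected, or max_pages is hit."""
    items, url, seen = [], start_url, set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page = fetch_page(url)
        items.extend(page["items"])
        url = page["next"]
    return items


first_page_only = fetch_page("/products?page=1")["items"]
all_items = scrape_all("/products?page=1")
```

The `seen` set and the `max_pages` cap are small safeguards against sites whose "next" links loop back on themselves.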

3. Ignoring Website Structure Changes

The Problem: Websites redesign layouts constantly. Traditional scrapers break when HTML structure changes, requiring manual fixes that consume 80% of maintenance time.

The Solution: Use platforms with AI-powered self-healing capabilities. According to research testing 3,000 pages across major e-commerce sites, AI methods maintained 98.4% accuracy even when page structures changed completely. ScrapeWise.ai and similar services monitor extraction accuracy and regenerate code automatically when failures occur.

4. Overloading Target Sites with Requests

The Problem: Aggressive scraping triggers anti-bot protections. Sites detect unusual request patterns and block your IP addresses, causing data collection to fail.

The Solution: Implement rate limiting (typically 1-3 requests per second for most sites) and use rotating proxies. Professional scraping infrastructure distributes requests across residential IP networks that appear as normal user traffic. The 2026 Apify report shows 65.8% of professionals increased proxy usage to maintain access as anti-bot systems became more sophisticated.
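As a rough illustration of rate limiting, the following sketch spaces calls so that no more than a chosen number happen per second. The clock and sleep functions are injectable so the example can run instantly with a fake clock. All class names here are illustrative.

```python
import time


class RateLimiter:
    """Spaces calls so that at most `max_per_second` happen each second."""

    def __init__(self, max_per_second, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 1.0 / max_per_second
        self._clock = clock
        self._sleep = sleep
        self._last_call = None

    def wait(self):
        """Block until the next request is allowed; return the seconds waited."""
        waited = 0.0
        if self._last_call is not None:
            remaining = self.min_interval - (self._clock() - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        self._last_call = self._clock()
        return waited


class FakeClock:
    """Test double: sleeping just advances a counter instead of pausing."""

    def __init__(self):
        self.now = 0.0

    def time(self):
        return self.now

    def sleep(self, seconds):
        self.now += seconds


fake = FakeClock()
limiter = RateLimiter(max_per_second=2, clock=fake.time, sleep=fake.sleep)
waits = [limiter.wait() for _ in range(3)]
```

In real use you would construct `RateLimiter(max_per_second=2)` with the default clock and call `limiter.wait()` before each request.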

5. Not Validating Data Quality

The Problem: Scrapers may extract data successfully but capture wrong values—mixing up prices with SKU numbers, or grabbing promotional text instead of actual product descriptions. Bad data at scale leads to catastrophically wrong business decisions.

The Solution: Implement automated quality checks that validate against expected schemas. Look for confidence scoring features that flag low-quality extractions for manual review. According to 2026 industry analysis, data quality validation—ensuring accuracy, freshness, and consistency—is now considered a core pillar of scraping success, not an afterthought.
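A basic version of such a quality check might look like the sketch below, which flags missing fields and prices that do not look numeric. The required fields, the price pattern, and the sample records are assumptions; a production schema would be stricter.

```python
import re

REQUIRED_FIELDS = ("sku", "name", "price")
PRICE_PATTERN = re.compile(r"^\d+(\.\d{1,2})?$")  # e.g. 24.99 or 54


def is_blank(value):
    return value is None or not str(value).strip()


def validate_record(record):
    """Return a list of problems found in one scraped record (empty if clean)."""
    problems = [
        f"missing {field}"
        for field in REQUIRED_FIELDS
        if is_blank(record.get(field))
    ]
    price = record.get("price")
    if not is_blank(price):
        text = str(price).strip()
        if not PRICE_PATTERN.match(text):
            problems.append(f"price is not numeric: {text!r}")
        elif float(text) <= 0:
            problems.append("price must be positive")
    return problems


# Hypothetical extraction results, including two typical failures.
scraped = [
    {"sku": "KT-100", "name": "Kettle", "price": "24.99"},
    {"sku": "TS-200", "name": "Toaster", "price": "TS-200"},  # SKU captured as price
    {"sku": "BL-300", "name": "", "price": "54.00"},          # name missing
]
report = {row["sku"]: validate_record(row) for row in scraped}
```

Records with a non-empty problem list can be routed to manual review instead of flowing straight into pricing systems.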

6. Exporting Unstructured or Messy Data

The Problem: Raw scraping output often contains HTML tags, inconsistent formatting, duplicate entries, and missing fields. Cleaning this manually wastes hours that should be spent on analysis.

The Solution: Use scrapers that normalize data during extraction. Configure field types (text, number, date, URL) so the platform handles formatting automatically. Export to structured formats like CSV or JSON with defined schemas rather than dumping everything into plain text files.
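For teams cleaning output themselves, normalization can be sketched as three steps: strip markup, convert price strings to numbers, and drop duplicates. The example assumes US-style number formatting (comma for thousands, period for decimals) and hypothetical field names.

```python
import html
import re

TAG_PATTERN = re.compile(r"<[^>]+>")
NUMBER_PATTERN = re.compile(r"\d[\d,]*(?:\.\d+)?")


def clean_text(raw):
    """Strip HTML tags, decode entities, and collapse whitespace."""
    text = html.unescape(TAG_PATTERN.sub(" ", raw))
    return " ".join(text.split())


def parse_price(raw):
    """Convert strings such as '$1,299.00' to a float; None if no number is present."""
    match = NUMBER_PATTERN.search(raw)
    return float(match.group().replace(",", "")) if match else None


def normalize(rows):
    """Clean each row and drop duplicates (same name and price)."""
    seen, cleaned = set(), []
    for row in rows:
        record = {"name": clean_text(row["name"]), "price": parse_price(row["price"])}
        key = (record["name"].lower(), record["price"])
        if key not in seen:
            seen.add(key)
            cleaned.append(record)
    return cleaned


# Hypothetical raw output with tags, entities, a duplicate, and a missing price.
raw_rows = [
    {"name": "<b>Kettle</b> &amp; Stand", "price": "$1,299.00"},
    {"name": "  Kettle &amp; Stand ", "price": "1299"},
    {"name": "<span>Toaster</span>", "price": "Call for price"},
]
clean_rows = normalize(raw_rows)
```

The second row collapses into the first after cleaning, and the missing price becomes `None` rather than a misleading zero.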

7. Neglecting to Download Product Images

The Problem: For e-commerce and product intelligence, images are often as valuable as text data. Many basic scrapers skip images by default because they require additional processing.

The Solution: Enable image extraction in your scraper configuration. Modern platforms can download product images, store them in cloud buckets, and link them to your structured data records. This creates complete product intelligence packages rather than text-only datasets.

8. Violating Terms of Service or Scraping Private Data

The Problem: Scraping authenticated content, bypassing paywalls, or collecting personal information without consent creates legal liability and ethical concerns.

The Solution: Limit scraping to public data visible without login. Avoid collecting names, email addresses, or other personal identifiers unless you have explicit legal justification. Review and respect robots.txt directives. When in doubt, consult legal counsel—especially for regulated industries like healthcare or finance.

How Retailers Use Automated Web Scraping for Competitive Intelligence

Retailers have become the largest adopters of automated web scraping, using it to transform how they compete in fast-moving markets. With e-commerce being one of the most-targeted website types for scraping in 2026, understanding these use cases reveals why data-driven pricing has become table stakes.

Real-Time Competitor Price Monitoring

The foundation of competitive intelligence is knowing what your competitors charge right now, not last week. Automated scrapers monitor competitor pricing continuously across:

- Multiple geographic markets — Prices often vary by region; scrapers track them all simultaneously

- Different sales channels — Competitors may price differently on their website, Amazon, eBay, and other marketplaces

- Time-based fluctuations — Dynamic pricing changes throughout the day based on demand, inventory, and competitor moves

According to 2026 market research, retailers using AI-driven extraction improved demand forecasting accuracy by 23% and cut stock-outs by 35%, saving approximately $1.1 million annually. This performance comes from having accurate, current pricing data feeding into pricing algorithms and inventory systems.

Promotional Campaign Intelligence

Understanding competitor promotional strategies provides tactical advantages:

- Discount frequency — How often do competitors run sales?

- Discount depth — What percentage reductions do they offer?

- Promotional timing — When do sales start and end? Are they coordinated with holidays or inventory cycles?

- Promotional mechanics — Buy-one-get-one offers, bundle discounts, or percentage-off deals?

Scraping promotional banners, discount tags, and special offer messaging reveals patterns that inform your own campaign calendar and discount strategies.

Automated Price Change Alerts

Speed matters in competitive retail. Automated systems send immediate notifications when:

- Competitors drop prices below your current levels

- New products launch in your category

- Inventory goes out of stock (signaling demand or supply constraints)

- Premium positioning opportunities appear (competitors raising prices)

These alerts enable rapid response. Rather than discovering price changes during weekly manual checks, teams learn within minutes and can adjust pricing, reorder inventory, or modify marketing spend before losing market share.
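The alert conditions above amount to comparing two snapshots of competitor data. Here is a simplified sketch, assuming each snapshot maps a SKU to a price and that `None` means out of stock. The SKUs, prices, and message wording are invented for the example.

```python
def detect_changes(previous, current, our_prices):
    """Compare two competitor snapshots and return human-readable alerts."""
    alerts = []
    for sku, price in current.items():
        if sku not in previous:
            alerts.append(f"{sku}: new product listed")
            continue
        old = previous[sku]
        if price is None:
            if old is not None:
                alerts.append(f"{sku}: went out of stock")
            continue
        ours = our_prices.get(sku)
        if old is not None and price < old and ours is not None and price < ours:
            alerts.append(
                f"{sku}: competitor dropped to {price:.2f}, below our {ours:.2f}"
            )
        elif old is not None and price > old:
            alerts.append(f"{sku}: competitor raised price to {price:.2f}")
    return alerts


# Hypothetical snapshots: SKU -> competitor price (None means out of stock).
previous = {"KT-100": 24.99, "TS-200": 39.50, "BL-300": 54.00}
current = {"KT-100": 22.49, "TS-200": None, "BL-300": 59.00, "JC-400": 79.00}
our_prices = {"KT-100": 23.99, "TS-200": 38.00, "BL-300": 55.00}

alerts = detect_changes(previous, current, our_prices)
```

Each string in `alerts` could be forwarded to email, chat, or a webhook, depending on what the team already uses.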

Category-Level Pricing Analysis

Individual product tracking is valuable, but category-level analysis reveals broader strategic patterns:

- Price architecture — How do competitors structure pricing across economy, mid-range, and premium tiers?

- Private label positioning — Where do store brands sit relative to national brands?

- Cross-category pricing — Do competitors use loss leaders in certain categories to drive traffic?

- Seasonal pricing patterns — How do prices shift across quarters and seasons?

Scraping entire categories—dairy products, consumer electronics, furniture—enables this strategic view. You can identify overpriced SKUs, pricing gaps where new products could fit, and opportunities to gain margin without sacrificing competitiveness.

Private Label vs Branded Product Comparison

For retailers with private label offerings, understanding how store brands compare to national brands is critical:

- Value gap analysis — Is your private label priced appropriately below the branded alternative?

- Quality perception — Do product descriptions and reviews suggest private labels deliver comparable quality?

- Assortment gaps — Where do competitors offer private labels that you don't?

This intelligence guides private label development, pricing strategy, and marketing messaging. Retailers that get private label positioning right can significantly improve gross margins while maintaining customer loyalty.
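Value gap analysis reduces to simple arithmetic once matching products are paired. A brief sketch follows, with product names and prices invented for illustration.

```python
def value_gap(private_label_price, branded_price):
    """Percentage by which the private label undercuts the branded product."""
    if branded_price <= 0:
        raise ValueError("branded_price must be positive")
    gap = (branded_price - private_label_price) / branded_price * 100
    return round(gap, 1)


# (product, private label price, branded price); hypothetical values.
pairs = [
    ("Olive oil 1L", 6.00, 8.00),
    ("Oat milk 1L", 1.80, 2.00),
    ("Dish soap", 3.30, 3.00),
]
gaps = {name: value_gap(private, branded) for name, private, branded in pairs}
```

A negative gap, as with the dish soap here, means the private label is priced above the branded alternative, which is usually worth a closer look.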

Market Share and Assortment Intelligence

Beyond pricing, scraping reveals what competitors carry:

- SKU count — How many products do competitors offer in each category?

- Brand representation — Which brands are they prioritizing or excluding?

- New product adoption speed — How quickly do they add newly launched products?

- Out-of-stock patterns — Which products consistently sell out, indicating high demand?

This assortment data helps merchandising teams identify category expansion opportunities, negotiate better terms with suppliers (by demonstrating competitor relationships), and optimize inventory depth.
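Once catalogs are scraped into a common structure, assortment gaps fall out of basic set operations. The sketch below assumes each catalog maps a category to the set of brands carried. The categories and brand names are made up.

```python
def assortment_gaps(our_catalog, competitor_catalog):
    """Map each category to the brands a competitor carries that we do not."""
    gaps = {}
    for category, their_brands in competitor_catalog.items():
        missing = their_brands - our_catalog.get(category, set())
        if missing:
            gaps[category] = sorted(missing)
    return gaps


# Hypothetical catalogs: category -> set of brands.
ours = {"kettles": {"Acme", "Borealis"}, "toasters": {"Acme"}}
theirs = {
    "kettles": {"Acme", "Borealis"},
    "toasters": {"Acme", "Cinder"},
    "blenders": {"Dynamo"},
}
gaps = assortment_gaps(ours, theirs)
```

Categories that appear only in the competitor's catalog, like `blenders` here, show up as gaps in their entirety.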

The Technology Behind Automated Web Scraping in 2026

Understanding what powers modern scraping platforms helps evaluate solutions and set realistic expectations.

Programming Languages and Frameworks

According to the 2026 Apify infrastructure report, 71.7% of web scraping professionals use Python, making it the dominant language for scraping operations. JavaScript follows at 17%, with C# and Go trailing behind.

Popular frameworks include:

- Scrapy — High-performance framework for large-scale scraping

- Playwright and Puppeteer — Browser automation for JavaScript-heavy sites (26.1% usage)

- Selenium — Veteran browser automation tool (26.1% usage)

- BeautifulSoup — Simple HTML parsing for smaller projects (43.5% usage)

However, no-code platforms abstract these technical choices away. Users interact with visual interfaces while the platform handles framework selection and optimization automatically.

Cloud Infrastructure and Scalability

In 2026, 62.5% of scraping professionals increased infrastructure expenses, driven by the need to scale operations and handle more sophisticated anti-bot systems. Cloud-based solutions now dominate because they:

- Scale to millions of requests without local hardware limitations

- Provide geographic distribution across multiple data centers

- Enable real-time or near-real-time scraping at enterprise scale

- Offer managed services that handle updates, security, and compliance

This infrastructure investment reflects scraping's evolution from experimental side project to mission-critical business capability.

AI-Powered Adaptation

The biggest technology shift in 2026 is AI integration. Rather than breaking when websites change, AI-powered scrapers:

- Detect layout changes automatically through computer vision analysis

- Regenerate extraction code using machine learning models trained on millions of pages

- Understand semantic meaning of page elements rather than relying on fixed HTML selectors

- Handle edge cases that rule-based systems miss

According to 2026 statistics, AI-powered scraping delivers 30-40% faster data extraction times and achieves accuracy rates up to 99.5% on dynamic, JavaScript-heavy websites. This performance advantage is why 63.6% of AI scraping users now employ AI for code generation while 72.7% report productivity improvements.

Proxy Networks and Anti-Bot Evasion

As anti-bot systems become more sophisticated—analyzing browser fingerprints, mouse movements, and request patterns in real-time—scraping infrastructure has evolved to match:

- Residential proxies route requests through real user IP addresses, appearing as normal consumer traffic

- Rotating proxy pools distribute requests across thousands of IPs to avoid pattern detection

- Session management maintains consistent browser state across requests

- CAPTCHA solving services automatically handle challenges using AI and human-verification hybrid approaches

The 2026 data showing 65.8% increased proxy usage reflects the arms race between scrapers and anti-bot systems. Professional platforms invest heavily in these capabilities so users don't have to.

Choosing the Right Automated Web Scraping Solution

With dozens of platforms available in 2026, selecting the right tool depends on your specific use case, technical resources, and data requirements.

For Non-Technical Teams: No-Code Platforms

Best for: Marketing teams, pricing analysts, and operations managers who need data without IT dependencies

Top Options:

- Browse AI — Visual robot training with 770,000+ users; prebuilt robots for common sites

- Octoparse — AI-assisted field detection with preset templates for popular websites

- ScrapeWise.ai — Managed infrastructure specifically for e-commerce competitive intelligence

Key Features to Look For:

- Visual point-and-click interface for defining extraction rules

- Preset templates for common websites (Amazon, eBay, retail sites)

- Scheduling and alert capabilities for automated monitoring

- Direct export to Excel, Google Sheets, or business intelligence tools

For Developers: API-First Solutions

Best for: Engineering teams building custom data pipelines and integrations

Top Options:

- ScrapeWise.ai — Managed scraping API with built-in proxy management and anti-bot evasion

- ScrapingBee — AI-powered API handling JavaScript rendering and data parsing automatically

- Oxylabs — Enterprise-grade extraction with 175 million IPs across 195 countries

Key Features to Look For:

- RESTful API with clear documentation

- Webhook support for real-time data delivery

- Custom header and authentication handling

- Comprehensive error handling and retry logic
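To show what retry logic usually means in practice, here is a minimal sketch of retrying with exponential backoff. `TransientError` and `flaky_fetch` are hypothetical stand-ins for a real API client's timeout or HTTP 429/503 handling, and the delays are illustrative.

```python
import time


class TransientError(Exception):
    """Raised for failures worth retrying (timeouts, temporary blocks)."""


def with_retries(func, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call func(); on TransientError wait base_delay * 2**attempt and try again."""
    for attempt in range(max_attempts):
        try:
            return func()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)


# Simulated request that fails twice before succeeding.
attempts = {"count": 0}


def flaky_fetch():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("temporary failure")
    return {"status": "ok"}


delays = []  # record the waits instead of actually sleeping
result = with_retries(flaky_fetch, sleep=delays.append)
```

The call succeeds on the third attempt after waits of 0.5 and 1.0 seconds. Doubling the delay gives a struggling server room to recover instead of hammering it with immediate retries.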

For Custom Projects: Open-Source Frameworks

Best for: Teams with specific requirements not met by commercial platforms

Top Options:

- Crawl4AI and Firecrawl — Zero-shot extraction using vision-language models; ideal for feeding LLMs

- Playwright + Browserless — Managed browser automation infrastructure

- Scrapy + Scrapoxy — High-performance framework with proxy rotation

Key Features to Look For:

- Active community and documentation

- Plugin ecosystem for common use cases

- Performance at scale (thousands of requests per second)

- Cloud deployment capabilities

Evaluation Criteria

When comparing scraping solutions, prioritize:

- Reliability — Does it handle site changes automatically or require constant maintenance?

- Anti-bot evasion — Can it access sites with sophisticated protection systems?

- Data quality — Does it validate output and provide confidence scoring?

- Compliance — Does it support rate limiting, geo-restrictions, and legal requirements?

- Support — Are there experts available to help when issues arise?

- Cost structure — How does pricing scale with your data volume?

According to Neil Patel's 2026 automation research, companies using managed scraping platforms report 3-5x faster time-to-value compared to building custom solutions, making the build-vs-buy decision straightforward for most organizations.

Conclusion: Automated Web Scraping as Competitive Advantage

Automated web scraping has graduated from technical tool to strategic capability. In markets where competitors monitor pricing in real-time and adjust dynamically, manual data collection creates structural disadvantages that compound daily.

The 2026 landscape shows clear momentum: 67% of organizations now use automated scraping as core infrastructure, the market is growing at a 14.7% CAGR toward $8.5 billion by 2032, and AI-powered tools extract data 30-40% faster than traditional methods. These numbers reflect scraping's evolution into essential business intelligence infrastructure.

For retailers, e-commerce brands, and pricing strategists, the question isn't whether to adopt automated scraping—it's how quickly to implement it before competitors gain insurmountable intelligence advantages. Start with high-value use cases like competitor price monitoring, use compliant managed platforms to minimize technical overhead, and scale as you prove ROI.

Modern no-code platforms have eliminated the barriers. You don't need developers, you don't need infrastructure expertise, and you don't need weeks of setup. Define what data you need, configure your scraper, and start receiving competitive intelligence that drives smarter, faster, more profitable decisions.

The businesses winning in 2026's fast-moving markets are the ones treating web data as strategic infrastructure, not an occasional research project. Automated web scraping makes that transformation possible.